#include <mikolov-rnnlm-lib.h>

Public Member Functions

| Type | Member |
| --- | --- |
| | `CRnnLM ()` |
| | `~CRnnLM ()` |
| real | `random (real min, real max)` |
| void | `setRnnLMFile (const std::string &str)` |
| int | `getHiddenLayerSize () const` |
| void | `setRandSeed (int newSeed)` |
| int | `getWordHash (const char *word)` |
| void | `readWord (char *word, FILE *fin)` |
| int | `searchVocab (const char *word)` |
| void | `saveWeights ()` |
| void | `initNet ()` |
| void | `goToDelimiter (int delim, FILE *fi)` |
| void | `restoreNet ()` |
| void | `netReset ()` |
| void | `computeNet (int last_word, int word)` |
| void | `copyHiddenLayerToInput ()` |
| void | `matrixXvector (struct neuron *dest, struct neuron *srcvec, struct synapse *srcmatrix, int matrix_width, int from, int to, int from2, int to2, int type)` |
| void | `restoreContextFromVector (const std::vector< float > &context_in)` |
| void | `saveContextToVector (std::vector< float > *context_out)` |
| float | `computeConditionalLogprob (std::string current_word, const std::vector< std::string > &history_words, const std::vector< float > &context_in, std::vector< float > *context_out)` |
| void | `setUnkSym (const std::string &unk)` |
| void | `setUnkPenalty (const std::string &filename)` |
| float | `getUnkPenalty (const std::string &word)` |
| bool | `isUnk (const std::string &word)` |

Public Attributes

| Type | Member |
| --- | --- |
| int | `alpha_set` |
| int | `train_file_set` |

Protected Member Functions

| Type | Member |
| --- | --- |
| void | `sortVocab ()` |

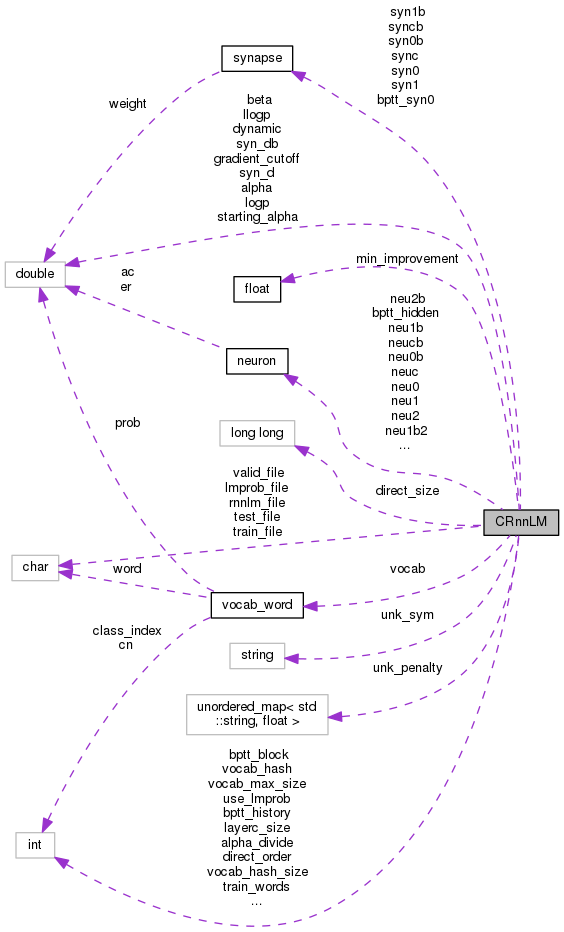

Definition at line 97 of file mikolov-rnnlm-lib.h.

| CRnnLM () |

Definition at line 73 of file mikolov-rnnlm-lib.cc.

References CRnnLM::alpha, CRnnLM::alpha_divide, CRnnLM::alpha_set, CRnnLM::beta, CRnnLM::bptt, CRnnLM::bptt_block, CRnnLM::bptt_hidden, CRnnLM::bptt_history, CRnnLM::bptt_syn0, CRnnLM::class_size, CRnnLM::direct_order, CRnnLM::direct_size, CRnnLM::dynamic, CRnnLM::filetype, CRnnLM::gen, CRnnLM::gradient_cutoff, CRnnLM::independent, CRnnLM::iter, CRnnLM::layer1_size, CRnnLM::llogp, CRnnLM::logp, CRnnLM::min_improvement, CRnnLM::neu0, CRnnLM::neu0b, CRnnLM::neu1, CRnnLM::neu1b, CRnnLM::neu1b2, CRnnLM::neu2, CRnnLM::neu2b, CRnnLM::neuc, CRnnLM::neucb, CRnnLM::old_classes, CRnnLM::rand_seed, CRnnLM::rnnlm_file, CRnnLM::syn0, CRnnLM::syn0b, CRnnLM::syn1, CRnnLM::syn1b, CRnnLM::syn_d, CRnnLM::syn_db, CRnnLM::sync, CRnnLM::syncb, CRnnLM::test_file, rnnlm::TEXT, CRnnLM::train_file, CRnnLM::train_file_set, CRnnLM::train_words, CRnnLM::use_lmprob, CRnnLM::valid_file, CRnnLM::version, CRnnLM::vocab, CRnnLM::vocab_hash, CRnnLM::vocab_hash_size, CRnnLM::vocab_max_size, and CRnnLM::vocab_size.

| ~CRnnLM () |

Definition at line 153 of file mikolov-rnnlm-lib.cc.

References CRnnLM::bptt_hidden, CRnnLM::bptt_history, CRnnLM::bptt_syn0, CRnnLM::class_cn, CRnnLM::class_max_cn, CRnnLM::class_size, CRnnLM::class_words, rnnlm::i, CRnnLM::neu0, CRnnLM::neu0b, CRnnLM::neu1, CRnnLM::neu1b, CRnnLM::neu1b2, CRnnLM::neu2, CRnnLM::neu2b, CRnnLM::neuc, CRnnLM::neucb, CRnnLM::syn0, CRnnLM::syn0b, CRnnLM::syn1, CRnnLM::syn1b, CRnnLM::syn_d, CRnnLM::syn_db, CRnnLM::sync, CRnnLM::syncb, CRnnLM::vocab, and CRnnLM::vocab_hash.

| float computeConditionalLogprob (std::string current_word, const std::vector< std::string > &history_words, const std::vector< float > &context_in, std::vector< float > *context_out) |

Definition at line 1140 of file mikolov-rnnlm-lib.cc.

References neuron::ac, CRnnLM::computeNet(), CRnnLM::copyHiddenLayerToInput(), CRnnLM::getUnkPenalty(), CRnnLM::history, rnnlm::i, CRnnLM::isUnk(), logprob, rnnlm::MAX_NGRAM_ORDER, CRnnLM::netReset(), CRnnLM::neu0, CRnnLM::neu2, CRnnLM::restoreContextFromVector(), CRnnLM::saveContextToVector(), CRnnLM::searchVocab(), CRnnLM::unk_sym, CRnnLM::vocab, and CRnnLM::vocab_size.

Referenced by KaldiRnnlmWrapper::GetLogProb().
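The value returned by computeConditionalLogprob is the log-probability of current_word given the history words and the hidden-layer context. As a minimal sketch, assuming the output layer is class-factored (computeNet's references to vocab_word::class_index, class_cn and class_words point that way) and that an out-of-vocabulary word is scored as the unknown-word symbol plus a per-word penalty (as the references to isUnk, unk_sym and getUnkPenalty suggest), the final combination step could look like this. ClassFactoredOutput and ConditionalLogprob are hypothetical names, not part of the library.

```cpp
#include <cmath>
#include <vector>

// Hypothetical container for the two halves of a class-factored output layer.
struct ClassFactoredOutput {
  std::vector<double> word_probs;   // P(w | class(w), history), indexed by word id
  std::vector<double> class_probs;  // P(c | history), indexed by class id
  std::vector<int> word_class;      // class id of each word
};

// log P(w | h) = log P(class(w) | h) + log P(w | class(w), h).
// An OOV word (word_id == -1) is scored as the unknown-word symbol unk_id,
// plus a per-word penalty that is already in the log domain.
double ConditionalLogprob(const ClassFactoredOutput &out, int word_id,
                          int unk_id, double unk_penalty) {
  const bool is_oov = (word_id == -1);
  const int id = is_oov ? unk_id : word_id;
  double logprob = std::log(out.class_probs[out.word_class[id]]) +
                   std::log(out.word_probs[id]);
  if (is_oov) logprob += unk_penalty;
  return logprob;
}
```

In the library both probabilities live in the output layer neu2 rather than in separate vectors; the exact indexing used there is not documented on this page.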

| void computeNet (int last_word, int word) |

Definition at line 929 of file mikolov-rnnlm-lib.cc.

References neuron::ac, CRnnLM::class_cn, vocab_word::class_index, CRnnLM::class_words, CRnnLM::direct_order, CRnnLM::direct_size, FAST_EXP, CRnnLM::gen, CRnnLM::history, CRnnLM::layer0_size, CRnnLM::layer1_size, CRnnLM::layer2_size, CRnnLM::layerc_size, CRnnLM::matrixXvector(), rnnlm::MAX_NGRAM_ORDER, CRnnLM::neu0, CRnnLM::neu1, CRnnLM::neu2, CRnnLM::neuc, rnnlm::PRIMES, rnnlm::PRIMES_SIZE, CRnnLM::syn0, CRnnLM::syn1, CRnnLM::syn_d, CRnnLM::sync, CRnnLM::vocab, CRnnLM::vocab_size, and synapse::weight.

Referenced by CRnnLM::computeConditionalLogprob().
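computeNet performs the forward pass for one word. The sketch below is a deliberately simplified, dense Elman-style step, assuming a one-hot current word concatenated with the previous hidden state, a sigmoid hidden layer and a full softmax output. It omits the word classes, the direct n-gram connections (syn_d, direct_order) and the optional extra layer (neuc, layerc_size) that the references above show the real function handles; MatVec and ForwardStep are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// y = M x for a row-major matrix M with `rows` rows and x.size() columns.
std::vector<double> MatVec(const std::vector<double> &m, std::size_t rows,
                           const std::vector<double> &x) {
  std::vector<double> y(rows, 0.0);
  for (std::size_t r = 0; r < rows; ++r)
    for (std::size_t c = 0; c < x.size(); ++c)
      y[r] += m[r * x.size() + c] * x[c];
  return y;
}

// One forward step of a toy Elman RNN LM (no classes, no direct connections):
//   hidden = sigmoid(U [onehot(word); previous hidden])
//   output = softmax(V hidden)
// U is hidden.size() x (vocab_size + hidden.size()); V is vocab_size x hidden.size().
// `hidden` is updated in place; the result is P(next word | history).
std::vector<double> ForwardStep(const std::vector<double> &u,
                                const std::vector<double> &v,
                                std::size_t vocab_size, std::size_t word,
                                std::vector<double> &hidden) {
  std::vector<double> input(vocab_size + hidden.size(), 0.0);
  input[word] = 1.0;
  for (std::size_t i = 0; i < hidden.size(); ++i) input[vocab_size + i] = hidden[i];

  hidden = MatVec(u, hidden.size(), input);
  for (double &a : hidden) a = 1.0 / (1.0 + std::exp(-a));  // sigmoid

  std::vector<double> out = MatVec(v, vocab_size, hidden);
  const double max_a = *std::max_element(out.begin(), out.end());
  double sum = 0.0;
  for (double &a : out) { a = std::exp(a - max_a); sum += a; }  // stable softmax
  for (double &a : out) a /= sum;
  return out;
}
```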

| void copyHiddenLayerToInput () |

Definition at line 1117 of file mikolov-rnnlm-lib.cc.

References neuron::ac, CRnnLM::layer0_size, CRnnLM::layer1_size, CRnnLM::neu0, and CRnnLM::neu1.

Referenced by CRnnLM::computeConditionalLogprob(), and CRnnLM::netReset().
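Judging from its references to layer0_size, layer1_size, neu0 and neu1, copyHiddenLayerToInput feeds the current hidden state back into the input layer for the next time step. A minimal sketch, assuming the hidden copy occupies the last layer1_size input units; neuron here is a stand-in with the ac and er fields referenced on this page, and CopyHiddenToInput is a hypothetical name.

```cpp
#include <cstddef>
#include <vector>

struct neuron { double ac; double er; };  // stand-in: activation, error

// Copy each hidden activation into the tail of the input layer, so the next
// step sees the previous hidden state as part of its input.
void CopyHiddenToInput(std::vector<neuron> &neu0, const std::vector<neuron> &neu1) {
  const std::size_t offset = neu0.size() - neu1.size();  // layer0_size - layer1_size
  for (std::size_t a = 0; a < neu1.size(); ++a) neu0[offset + a].ac = neu1[a].ac;
}
```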

| inline |

Definition at line 200 of file mikolov-rnnlm-lib.h.

| float getUnkPenalty (const std::string &word) |

Definition at line 1212 of file mikolov-rnnlm-lib.cc.

References CRnnLM::iter, and CRnnLM::unk_penalty.

Referenced by CRnnLM::computeConditionalLogprob().
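getUnkPenalty returns the penalty stored in unk_penalty for a given word. A minimal sketch of that lookup, assuming a hash map from word to penalty and a caller-supplied default for words without an entry (the container type, the default and the name UnkPenalty are assumptions):

```cpp
#include <string>
#include <unordered_map>

// Return the penalty for `word`, or `default_penalty` if it has no entry.
float UnkPenalty(const std::unordered_map<std::string, float> &unk_penalty,
                 const std::string &word, float default_penalty) {
  auto it = unk_penalty.find(word);
  return it != unk_penalty.end() ? it->second : default_penalty;
}
```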

| int getWordHash (const char *word) |

Definition at line 245 of file mikolov-rnnlm-lib.cc.

References CRnnLM::vocab_hash_size.

Referenced by CRnnLM::searchVocab().
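getWordHash maps a word to a slot in a table of vocab_hash_size entries. The sketch below shows one common way to do this, a multiplicative string hash reduced modulo the table size; the multiplier 237 and the name WordHash are assumptions for illustration.

```cpp
#include <cstddef>
#include <cstring>

// Multiplicative string hash, reduced to [0, vocab_hash_size).
int WordHash(const char *word, int vocab_hash_size) {
  unsigned int hash = 0;
  const std::size_t len = std::strlen(word);
  for (std::size_t i = 0; i < len; ++i)
    hash = hash * 237u + static_cast<unsigned char>(word[i]);  // wraps mod 2^32
  return static_cast<int>(hash % static_cast<unsigned int>(vocab_hash_size));
}
```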

| void goToDelimiter (int delim, FILE *fi) |

Definition at line 557 of file mikolov-rnnlm-lib.cc.

Referenced by CRnnLM::restoreNet().
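goToDelimiter is used by restoreNet, presumably to skip over labels in a text-format model file. A minimal sketch, assuming the function consumes input up to and including the first delim character and treats reaching end of file first as an error (the exception and the name SkipToDelimiter are assumptions):

```cpp
#include <cstdio>
#include <stdexcept>

// Consume characters from fi up to and including the first `delim`.
void SkipToDelimiter(int delim, std::FILE *fi) {
  int ch;
  while ((ch = std::fgetc(fi)) != EOF) {
    if (ch == delim) return;
  }
  throw std::runtime_error("unexpected end of file");
}
```

For example, with a header line such as `vocabulary size: 42` (a layout assumed here), skipping to `':'` leaves the stream positioned just before the number, which can then be read with `fscanf`.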

| void initNet () |

Definition at line 344 of file mikolov-rnnlm-lib.cc.

References neuron::ac, CRnnLM::bptt, CRnnLM::bptt_block, CRnnLM::bptt_hidden, CRnnLM::bptt_history, CRnnLM::bptt_syn0, CRnnLM::class_cn, vocab_word::class_index, CRnnLM::class_max_cn, CRnnLM::class_size, CRnnLM::class_words, vocab_word::cn, CRnnLM::direct_size, neuron::er, rnnlm::i, CRnnLM::layer0_size, CRnnLM::layer1_size, CRnnLM::layer2_size, CRnnLM::layerc_size, CRnnLM::neu0, CRnnLM::neu0b, CRnnLM::neu1, CRnnLM::neu1b, CRnnLM::neu1b2, CRnnLM::neu2, CRnnLM::neu2b, CRnnLM::neuc, CRnnLM::neucb, CRnnLM::old_classes, CRnnLM::random(), CRnnLM::saveWeights(), CRnnLM::syn0, CRnnLM::syn0b, CRnnLM::syn1, CRnnLM::syn1b, CRnnLM::syn_d, CRnnLM::sync, CRnnLM::syncb, CRnnLM::vocab, CRnnLM::vocab_size, and synapse::weight.

Referenced by CRnnLM::restoreNet().

| bool isUnk (const std::string &word) |

Definition at line 1201 of file mikolov-rnnlm-lib.cc.

References CRnnLM::searchVocab().

Referenced by CRnnLM::computeConditionalLogprob().

| void matrixXvector (struct neuron *dest, struct neuron *srcvec, struct synapse *srcmatrix, int matrix_width, int from, int to, int from2, int to2, int type) |

Definition at line 815 of file mikolov-rnnlm-lib.cc.

References neuron::ac, neuron::er, CRnnLM::gradient_cutoff, and synapse::weight.

Referenced by CRnnLM::computeNet().
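matrixXvector accumulates a matrix-vector product into a slice of a neuron array. A minimal sketch, assuming type 0 is the forward product on activations (ac) and any other type is the transposed product on errors (er) followed by clipping to gradient_cutoff, which the references to neuron::er and gradient_cutoff suggest. Rows are [from, to), columns are [from2, to2), and the weight matrix is row-major with matrix_width columns. The neuron and synapse structs are stand-ins, and MatrixXVector is a hypothetical name.

```cpp
#include <vector>

struct neuron { double ac; double er; };  // stand-in: activation, error
struct synapse { double weight; };        // stand-in: one matrix entry

// type 0: dest[i].ac += sum_j srcvec[j].ac * W[i][j],  i in [from, to), j in [from2, to2)
// else:   dest[j].er += sum_i srcvec[i].er * W[i][j],  then clip to [-cutoff, cutoff]
// with W[i][j] = srcmatrix[j + i * matrix_width].
void MatrixXVector(std::vector<neuron> &dest, const std::vector<neuron> &srcvec,
                   const std::vector<synapse> &srcmatrix, int matrix_width,
                   int from, int to, int from2, int to2, int type, double cutoff) {
  if (type == 0) {
    for (int i = from; i < to; ++i)
      for (int j = from2; j < to2; ++j)
        dest[i].ac += srcvec[j].ac * srcmatrix[j + i * matrix_width].weight;
  } else {
    for (int j = from2; j < to2; ++j) {
      for (int i = from; i < to; ++i)
        dest[j].er += srcvec[i].er * srcmatrix[j + i * matrix_width].weight;
      if (dest[j].er > cutoff) dest[j].er = cutoff;    // gradient clipping
      if (dest[j].er < -cutoff) dest[j].er = -cutoff;
    }
  }
}
```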

| void netReset () |

Definition at line 789 of file mikolov-rnnlm-lib.cc.

References neuron::ac, CRnnLM::bptt, CRnnLM::bptt_block, CRnnLM::bptt_hidden, CRnnLM::bptt_history, CRnnLM::copyHiddenLayerToInput(), neuron::er, CRnnLM::history, CRnnLM::layer1_size, rnnlm::MAX_NGRAM_ORDER, and CRnnLM::neu1.

Referenced by CRnnLM::computeConditionalLogprob().
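netReset returns the network to a fixed starting state before a new history is scored. A minimal sketch, assuming the hidden activations are set to the constant 1.0 (an assumed value), mirrored into the input layer as copyHiddenLayerToInput does, and that the n-gram history is zeroed. The real function also touches the BPTT buffers (bptt_history, bptt_hidden), which this sketch leaves out; ResetState is a hypothetical name.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct neuron { double ac; double er; };  // stand-in: activation, error

void ResetState(std::vector<neuron> &neu0, std::vector<neuron> &neu1,
                std::vector<int> &history) {
  for (neuron &n : neu1) n.ac = 1.0;  // assumed reset value
  // Mirror the hidden state into the tail of the input layer.
  const std::size_t offset = neu0.size() - neu1.size();
  for (std::size_t a = 0; a < neu1.size(); ++a) neu0[offset + a].ac = neu1[a].ac;
  std::fill(history.begin(), history.end(), 0);  // clear the n-gram history
}
```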

| void readWord (char *word, FILE *fin) |

Definition at line 212 of file mikolov-rnnlm-lib.cc.

References MAX_STRING.

Referenced by CRnnLM::restoreNet().
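readWord extracts the next token from a text stream into a buffer bounded by MAX_STRING. A minimal sketch using std::string instead of a fixed buffer, assuming whitespace-delimited tokens, carriage returns ignored, and a line break reported as the sentence-end token "</s>" (that token convention is an assumption modeled on the original RNNLM text format); ReadToken is a hypothetical name.

```cpp
#include <cstdio>
#include <string>

// Return the next token from fin, "</s>" for a line break, or "" at end of file.
std::string ReadToken(std::FILE *fin) {
  std::string word;
  int ch;
  while ((ch = std::fgetc(fin)) != EOF) {
    if (ch == '\r') continue;
    if (ch == ' ' || ch == '\t' || ch == '\n') {
      if (!word.empty()) {
        if (ch == '\n') std::ungetc(ch, fin);  // report "</s>" on the next call
        break;
      }
      if (ch == '\n') return "</s>";
      continue;  // skip leading blanks
    }
    word.push_back(static_cast<char>(ch));
  }
  return word;
}
```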

| void restoreContextFromVector (const std::vector< float > &context_in) |

Definition at line 1125 of file mikolov-rnnlm-lib.cc.

References neuron::ac, rnnlm::i, CRnnLM::layer1_size, and CRnnLM::neu1.

Referenced by CRnnLM::computeConditionalLogprob().

| void restoreNet () |

Definition at line 569 of file mikolov-rnnlm-lib.cc.

References neuron::ac, CRnnLM::alpha, CRnnLM::alpha_divide, CRnnLM::alpha_set, CRnnLM::anti_k, rnnlm::BINARY, CRnnLM::bptt, CRnnLM::bptt_block, CRnnLM::class_size, rnnlm::d, CRnnLM::direct_order, CRnnLM::direct_size, CRnnLM::filetype, CRnnLM::goToDelimiter(), CRnnLM::independent, CRnnLM::initNet(), CRnnLM::iter, CRnnLM::layer0_size, CRnnLM::layer1_size, CRnnLM::layer2_size, CRnnLM::layerc_size, CRnnLM::llogp, CRnnLM::logp, MAX_STRING, CRnnLM::neu0, CRnnLM::neu1, CRnnLM::old_classes, CRnnLM::readWord(), CRnnLM::rnnlm_file, CRnnLM::saveWeights(), CRnnLM::starting_alpha, CRnnLM::syn0, CRnnLM::syn1, CRnnLM::syn_d, CRnnLM::sync, rnnlm::TEXT, CRnnLM::train_cur_pos, CRnnLM::train_file, CRnnLM::train_file_set, CRnnLM::train_words, CRnnLM::valid_file, CRnnLM::version, CRnnLM::vocab, CRnnLM::vocab_max_size, CRnnLM::vocab_size, and synapse::weight.

Referenced by KaldiRnnlmWrapper::KaldiRnnlmWrapper().

| void saveContextToVector (std::vector< float > *context_out) |

Definition at line 1132 of file mikolov-rnnlm-lib.cc.

References neuron::ac, rnnlm::i, CRnnLM::layer1_size, and CRnnLM::neu1.

Referenced by CRnnLM::computeConditionalLogprob().
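saveContextToVector and restoreContextFromVector move the hidden-layer activations (neu1, of size layer1_size) between the network and a std::vector<float>, which lets a caller carry the recurrent state from one computeConditionalLogprob call to the next. A minimal sketch of the round trip; the size check in RestoreContext is our assumption, and SaveContext, RestoreContext and the neuron stand-in are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct neuron { double ac; double er; };  // stand-in: activation, error

// Copy hidden activations out of the network.
void SaveContext(const std::vector<neuron> &neu1, std::vector<float> *context_out) {
  context_out->resize(neu1.size());
  for (std::size_t i = 0; i < neu1.size(); ++i)
    (*context_out)[i] = static_cast<float>(neu1[i].ac);
}

// Copy a previously saved context back into the hidden layer.
void RestoreContext(const std::vector<float> &context_in, std::vector<neuron> &neu1) {
  assert(context_in.size() == neu1.size());  // assumed precondition
  for (std::size_t i = 0; i < neu1.size(); ++i) neu1[i].ac = context_in[i];
}
```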

| void saveWeights () |

Definition at line 292 of file mikolov-rnnlm-lib.cc.

References neuron::ac, neuron::er, CRnnLM::layer0_size, CRnnLM::layer1_size, CRnnLM::layer2_size, CRnnLM::layerc_size, CRnnLM::neu0, CRnnLM::neu0b, CRnnLM::neu1, CRnnLM::neu1b, CRnnLM::neu2, CRnnLM::neu2b, CRnnLM::neuc, CRnnLM::neucb, CRnnLM::syn0, CRnnLM::syn0b, CRnnLM::syn1, CRnnLM::syn1b, CRnnLM::sync, CRnnLM::syncb, and synapse::weight.

Referenced by CRnnLM::initNet(), and CRnnLM::restoreNet().

| int searchVocab (const char *word) |

Definition at line 257 of file mikolov-rnnlm-lib.cc.

References CRnnLM::getWordHash(), CRnnLM::vocab, CRnnLM::vocab_hash, and CRnnLM::vocab_size.

Referenced by CRnnLM::computeConditionalLogprob(), and CRnnLM::isUnk().
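searchVocab returns the index of a word, and isUnk reports whether a word is missing from the vocabulary. A minimal sketch, assuming vocab_hash caches one word id per hash slot and a linear scan over vocab resolves collisions, with -1 returned for an out-of-vocabulary word; Vocab, Slot, Add, Search and IsUnk are hypothetical names.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical vocabulary: a hash table caching one word id per slot, backed
// by a linear scan over all words when the cached id does not match.
struct Vocab {
  std::vector<std::string> words;  // index = word id
  std::vector<int> hash_table;     // slot -> word id, or -1 if never filled

  explicit Vocab(std::size_t hash_size) : hash_table(hash_size, -1) {}

  std::size_t Slot(const std::string &w) const {
    unsigned int h = 0;
    for (unsigned char c : w) h = h * 237u + c;
    return h % hash_table.size();
  }

  void Add(const std::string &w) {
    hash_table[Slot(w)] = static_cast<int>(words.size());
    words.push_back(w);
  }

  // Returns the word id of w, or -1 if w is out of vocabulary.
  int Search(const std::string &w) {
    const std::size_t slot = Slot(w);
    const int cached = hash_table[slot];
    if (cached == -1) return -1;  // no added word ever hashed here
    if (words[cached] == w) return cached;
    for (std::size_t i = 0; i < words.size(); ++i) {
      if (words[i] == w) {
        hash_table[slot] = static_cast<int>(i);  // cache the hit
        return static_cast<int>(i);
      }
    }
    return -1;
  }

  bool IsUnk(const std::string &w) { return Search(w) == -1; }
};
```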

| void setRandSeed (int newSeed) |

Definition at line 207 of file mikolov-rnnlm-lib.cc.

References CRnnLM::rand_seed.

Referenced by KaldiRnnlmWrapper::KaldiRnnlmWrapper().

| void setRnnLMFile (const std::string &str) |

Definition at line 203 of file mikolov-rnnlm-lib.cc.

References CRnnLM::rnnlm_file.

Referenced by KaldiRnnlmWrapper::KaldiRnnlmWrapper().

| void setUnkPenalty (const std::string &filename) |

Definition at line 1220 of file mikolov-rnnlm-lib.cc.

References SequentialTableReader< Holder >::Done(), SequentialTableReader< Holder >::FreeCurrent(), SequentialTableReader< Holder >::Key(), SequentialTableReader< Holder >::Next(), CRnnLM::unk_penalty, and SequentialTableReader< Holder >::Value().

Referenced by KaldiRnnlmWrapper::KaldiRnnlmWrapper().
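setUnkPenalty fills unk_penalty from filename, and the references to SequentialTableReader's Done(), Next(), Key(), Value() and FreeCurrent() show that it iterates over a Kaldi table keyed by word. The sketch below stands in for that with a plain-text parser of `word value` lines, roughly the shape of a text-mode Kaldi archive of floats; ReadUnkPenalties is a hypothetical name, and real Kaldi rspecifiers are not handled.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <unordered_map>

// Build a word -> penalty map from "word value" lines; malformed lines are skipped.
std::unordered_map<std::string, float> ReadUnkPenalties(std::istream &in) {
  std::unordered_map<std::string, float> penalties;
  std::string line;
  while (std::getline(in, line)) {
    std::istringstream fields(line);
    std::string word;
    float value;
    if (fields >> word >> value) penalties[word] = value;
  }
  return penalties;
}
```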

| void setUnkSym (const std::string &unk) |

Definition at line 1208 of file mikolov-rnnlm-lib.cc.

References CRnnLM::unk_sym.

Referenced by KaldiRnnlmWrapper::KaldiRnnlmWrapper().

| protected |

Definition at line 276 of file mikolov-rnnlm-lib.cc.

References kaldi::swap(), CRnnLM::vocab, and CRnnLM::vocab_size.

| protected |

Definition at line 114 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 116 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| int alpha_set |

Definition at line 191 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 126 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::restoreNet().

| protected |

Definition at line 128 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM().

| protected |

Definition at line 150 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::netReset(), and CRnnLM::restoreNet().

| protected |

Definition at line 151 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::netReset(), and CRnnLM::restoreNet().

| protected |

Definition at line 153 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::netReset(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 152 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::netReset(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 154 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 132 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::initNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 133 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::initNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 130 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 131 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::initNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 124 of file mikolov-rnnlm-lib.h.

| protected |

Definition at line 147 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 146 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), and CRnnLM::restoreNet().

| protected |

Definition at line 112 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM().

| protected |

Definition at line 107 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 156 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), and CRnnLM::CRnnLM().

| protected |

Definition at line 110 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::matrixXvector().

| protected |

Definition at line 148 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeConditionalLogprob(), CRnnLM::computeNet(), and CRnnLM::netReset().

| protected |

Definition at line 158 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 119 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::getUnkPenalty(), and CRnnLM::restoreNet().

| protected |

Definition at line 141 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::copyHiddenLayerToInput(), CRnnLM::initNet(), CRnnLM::restoreNet(), and CRnnLM::saveWeights().

| protected |

Definition at line 142 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::copyHiddenLayerToInput(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::netReset(), CRnnLM::restoreContextFromVector(), CRnnLM::restoreNet(), CRnnLM::saveContextToVector(), and CRnnLM::saveWeights().

| protected |

Definition at line 144 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::initNet(), CRnnLM::restoreNet(), and CRnnLM::saveWeights().

| protected |

Definition at line 143 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::initNet(), CRnnLM::restoreNet(), and CRnnLM::saveWeights().

| protected |

Definition at line 117 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 103 of file mikolov-rnnlm-lib.h.

| protected |

Definition at line 117 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 118 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM().

| protected |

Definition at line 160 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeConditionalLogprob(), CRnnLM::computeNet(), CRnnLM::copyHiddenLayerToInput(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 173 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 161 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::copyHiddenLayerToInput(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::netReset(), CRnnLM::restoreContextFromVector(), CRnnLM::restoreNet(), CRnnLM::saveContextToVector(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 174 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 184 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 163 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeConditionalLogprob(), CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 176 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 162 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 175 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 134 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), and CRnnLM::restoreNet().

| protected |

Definition at line 105 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::setRandSeed().

| protected |

Definition at line 102 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::restoreNet(), and CRnnLM::setRnnLMFile().

| protected |

Definition at line 115 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::restoreNet().

| protected |

Definition at line 165 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 178 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 166 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 179 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 169 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 181 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 168 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 180 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::saveWeights(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 101 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM().

| protected |

Definition at line 123 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::restoreNet().

| protected |

Definition at line 99 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| int train_file_set |

Definition at line 191 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 122 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 186 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::getUnkPenalty(), and CRnnLM::setUnkPenalty().

| protected |

Definition at line 187 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeConditionalLogprob(), and CRnnLM::setUnkSym().

| protected |

Definition at line 109 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM().

| protected |

Definition at line 100 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 106 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 136 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeConditionalLogprob(), CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), CRnnLM::searchVocab(), CRnnLM::sortVocab(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 138 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), CRnnLM::searchVocab(), and CRnnLM::~CRnnLM().

| protected |

Definition at line 139 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::getWordHash().

| protected |

Definition at line 120 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::CRnnLM(), and CRnnLM::restoreNet().

| protected |

Definition at line 121 of file mikolov-rnnlm-lib.h.

Referenced by CRnnLM::computeConditionalLogprob(), CRnnLM::computeNet(), CRnnLM::CRnnLM(), CRnnLM::initNet(), CRnnLM::restoreNet(), CRnnLM::searchVocab(), and CRnnLM::sortVocab().